Sensitive data analysis platform¶

The Sensitive data analysis platform, or SAPU, is an environment provided by the University of Tartu High-Performance Computing Centre (UTHPC), where analysts and programmers can work on sensitive data. The environment reduces the risk of unauthorized copying, transfer, or retrieval of sensitive data from the machines, providing a higher level of security than a standard high-performance cluster.

Overview¶

SAPU is an isolated environment where:

- The machine has no access to the outside world.

- The network is completely isolated by firewall rules.

- Access to the machine is possible only through a virtual desktop environment.

- One can't use standard Linux tools to move files.

- Analysts can move files using object storage, which saves anything moved.

- Moving files out requires approval from the data owners' side.

- The monitoring layer and the server record all actions taken.

The following roles exist in SAPU:

| Role | Permissions | Notes |
|---|---|---|
| Cloud Operators | Admin privileges everywhere. | The UTHPC centre fills this role by taking care of the security, monitoring and operational tasks, making sure the machines work. |
| Data Owners | Direct access to the machine and monitoring. | This role consists of people who own the data. They provide analyzable data. |
| Data Custodians | Direct access to the machine and monitoring. | Technical representatives of the data owners - responsible for technical tasks, helping cloud operators and analysts. |
| Data Analysts | Only virtual desktop access. | People who work with the data. |

Requesting a project¶

A data owner can request a new SAPU machine by writing to support@hpc.ut.ee with a list of their requirements.

The list of requirements should contain the following:

- The name of the project - this will also become the machine's name.

- Duration of the project, during which at least the storage is preserved.

- List of users, divided into their roles.

- Contact information for data owners, custodians and analysts.

- Necessary CPU, memory and disk resources.

- List of necessary software to be set up beforehand.

Afterwards, the UTHPC creates a virtual machine with the necessary resources and software. Then, data owners and/or custodians move the necessary data into the machine, after which analysts get access to the machine.

The machine keeps running, incurring resource charges until a request for pausing comes from someone related to the project. Then, only storage incurs a cost.
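The requirement list above can be gathered into a single structure before writing to support. The following is only a sketch: the class name `SapuRequest`, its field names, and the gigabyte units are hypothetical and not a format that UTHPC prescribes.

```python
from dataclasses import dataclass, field


@dataclass
class SapuRequest:
    """Hypothetical container for the items a SAPU project request should list."""

    project_name: str                     # also becomes the machine's name
    duration: str                         # period during which at least storage is preserved
    users_by_role: dict[str, list[str]]   # role name -> user names
    contacts: dict[str, str]              # user name -> contact information
    cpus: int
    memory_gb: int                        # unit is an assumption
    disk_gb: int                          # unit is an assumption
    software: list[str] = field(default_factory=list)

    def to_email_body(self) -> str:
        """Render the request as plain text suitable for an email."""
        lines = [
            f"Project name: {self.project_name}",
            f"Duration: {self.duration}",
            "Users:",
        ]
        for role, users in self.users_by_role.items():
            lines.append(f"  {role}: {', '.join(users)}")
        lines.append("Contacts:")
        for name, contact in self.contacts.items():
            lines.append(f"  {name}: {contact}")
        lines.append(
            f"Resources: {self.cpus} CPU, {self.memory_gb} GB memory, {self.disk_gb} GB disk"
        )
        lines.append(f"Software: {', '.join(self.software) or 'none'}")
        return "\n".join(lines)


if __name__ == "__main__":
    request = SapuRequest(
        project_name="demo",
        duration="12 months",
        users_by_role={"Data Owners": ["alice"], "Data Analysts": ["bob"]},
        contacts={"alice": "alice@example.org", "bob": "bob@example.org"},
        cpus=8,
        memory_gb=32,
        disk_gb=500,
        software=["R", "Python"],
    )
    print(request.to_email_body())
```

Keeping the users grouped by role mirrors the role table, which makes it easier for the operators to assign the right access to each person.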

Operations¶

This section walks through some frequent tasks related to SAPU.

Data analyst access¶

Data analysts receive their credentials through a previously agreed-upon method. This method depends on where the data analyst is from and whether they're associated with the University of Tartu. Possible ways of sharing:

| Method | Subject | Notes |
|---|---|---|
| UTHPC Vault. | People associated with University of Tartu. | |
| GNU Privacy Guard (GPG) encryption. | Anyone with GPG keys. | Requires some technical knowledge. |
| Estonian ID-card encryption. | People with Estonian ID card. | Requires a working ID-card together with PIN codes. |
| Two random channels. | Anyone. | Data analysts receive an encrypted file through one channel, and the encryption password through another. |

Note

SAPU username/password and object storage username/password are not synchronized. Changing the password in SAPU does not automatically change it in object storage, and vice versa.

Logging in¶

After receiving the credentials, an analyst can log in through the Virtual Desktop website desktop.sapu.hpc.ut.ee. After login, the machines that the analyst can access are listed under the 'ALL CONNECTIONS' tab. Clicking on a machine opens its graphical virtual desktop, where the analyst needs to enter the same username and password again.

If the analyst has access to only one machine, they're directly put into the virtual desktop.

Accessing Guacamole settings¶

The settings tab opens with the keyboard combination Ctrl+Alt+Shift, which brings up a side menu. It's used to access other hosts, settings, or the clipboard.

Changing the password¶

Data analysts can change their passwords on the identity server when inside the University of Tartu internal network, either through the VPN or eduroam Wi-Fi. If this isn't possible, the password can also be changed while logged into a server, using the passwd command.

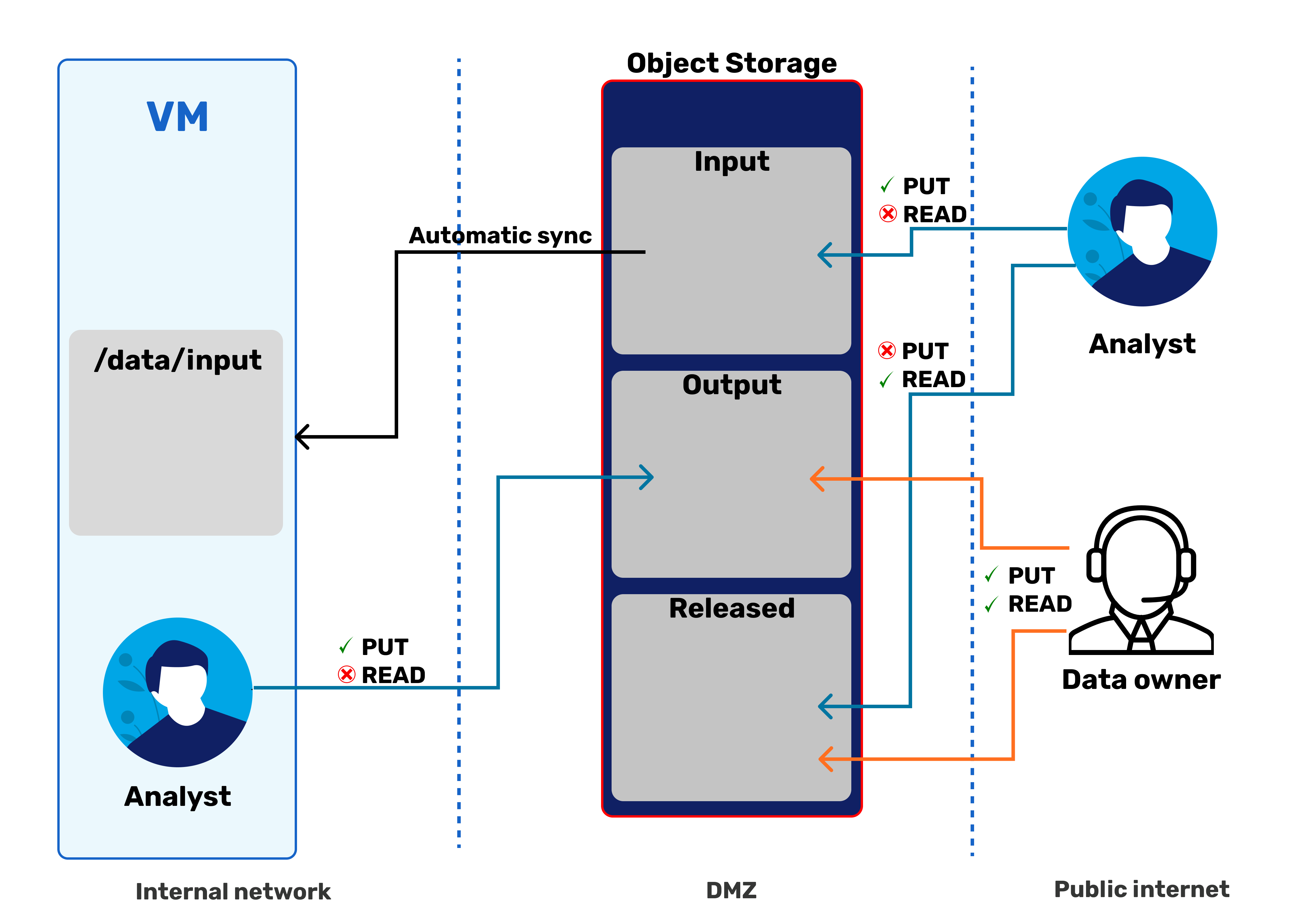

Object storage¶

If an analyst has requested a data transfer and the transfer is allowed, the analyst also receives credentials for object storage. Object storage is available at object.hpc.ut.ee. Upon login, one sees the list of buckets, named in the format <project_name>.sapu.hpc.ut.ee.

Browsing inside reveals three folders - input, output and released.

<project>.sapu.hpc.ut.ee/

├── input #(1)

├── output #(2)

└── released #(3)

- (1) Data analysts can read and write into this folder; the SAPU machine synchronizes this folder to /data/input.

- (2) Data analysts can neither read nor write this folder; the SAPU machine can write into it.

- (3) Data analysts can only read from this folder; the SAPU machine doesn't use this.

The folders serve the following purposes:

- The input folder is for moving files into SAPU from the outside world.

- The output folder is for requesting moving files from SAPU to the outside world.

- The released folder is from where a data analyst can download the approved output files.
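The analyst permissions on the three folders described above can be summarised as a small lookup table. This is an illustrative sketch only: the names `ANALYST_PERMISSIONS`, `analyst_can`, and `bucket_name` are hypothetical, and the real access control is enforced by the object storage service, not by client code.

```python
# Data analyst permissions per object storage folder, as described above.
ANALYST_PERMISSIONS = {
    "input": {"read", "write"},  # analysts upload here; synchronized to /data/input
    "output": set(),             # analysts have no access; the SAPU machine writes here
    "released": {"read"},        # analysts download approved files from here
}


def analyst_can(action: str, folder: str) -> bool:
    """Return True if a data analyst may perform `action` on `folder`."""
    return action in ANALYST_PERMISSIONS.get(folder, set())


def bucket_name(project: str) -> str:
    """Build the bucket name in the <project_name>.sapu.hpc.ut.ee format."""
    return f"{project}.sapu.hpc.ut.ee"


if __name__ == "__main__":
    for folder in ANALYST_PERMISSIONS:
        allowed = sorted(ANALYST_PERMISSIONS[folder]) or ["no access"]
        print(f"{bucket_name('demo')}/{folder}: {', '.join(allowed)}")
```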

Moving data into SAPU¶

You can move data into SAPU through the input folder in object storage. After you upload a file via the mc command line tool or the web UI, the SAPU machine automatically downloads it into the /data/input folder. The synchronization time depends on the file size, but small files should arrive in under a minute.
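As a rough illustration of the mc route, the sketch below only builds the command lines for an upload; it doesn't run them. The alias name `sapu`, the `https://` scheme on the endpoint, and the helper name `mc_upload_commands` are assumptions, while `mc alias set` and `mc cp` are standard MinIO Client subcommands.

```python
import shlex


def mc_upload_commands(
    project: str,
    local_file: str,
    access_key: str,
    secret_key: str,
    alias: str = "sapu",                         # hypothetical alias name
    endpoint: str = "https://object.hpc.ut.ee",  # scheme is an assumption
) -> list[list[str]]:
    """Build the mc commands that copy a local file into the input folder."""
    bucket = f"{project}.sapu.hpc.ut.ee"
    return [
        # Register the object storage endpoint and credentials under an alias.
        ["mc", "alias", "set", alias, endpoint, access_key, secret_key],
        # Copy the file into the input folder of the project bucket.
        ["mc", "cp", local_file, f"{alias}/{bucket}/input/"],
    ]


if __name__ == "__main__":
    for command in mc_upload_commands("demo", "data.csv", "ACCESS_KEY", "SECRET_KEY"):
        # shlex.join quotes each argument so the line can be pasted into a shell.
        print(shlex.join(command))
```

Passing the secret key as a command argument leaves it in the shell history, so in practice it may be preferable to let mc prompt for the credentials instead.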

Moving data out¶

Moving data out of SAPU has two phases. First, an analyst moves the file into the /data/output folder inside the SAPU machine, which then gets synchronized into the output folder in object storage.

After moving the file, the data analyst notifies either the data owners or data custodians, who inspect the files. If the files meet the data protection, security, and policy standards, the data owner or data custodian moves the files to the released folder in object storage, from which data analysts can download them from the outside world.
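The review step above can be modelled as a small state change on a file. This is a simplified sketch, not how SAPU implements the process: the names `OutputFile`, `REVIEWER_ROLES`, and `release` are hypothetical, and the actual inspection is a manual decision by the data owner or data custodian.

```python
from dataclasses import dataclass

# Roles that, per the text above, inspect and release output files.
REVIEWER_ROLES = {"data owner", "data custodian"}


@dataclass
class OutputFile:
    name: str
    folder: str = "output"  # object storage folder the file currently sits in


def release(file: OutputFile, reviewer_role: str, approved: bool) -> OutputFile:
    """Move a reviewed file from output to released if the reviewer approves it."""
    if reviewer_role not in REVIEWER_ROLES:
        raise PermissionError(f"{reviewer_role!r} may not release files")
    if file.folder != "output":
        raise ValueError(f"{file.name} is not waiting in the output folder")
    if approved:
        file.folder = "released"
    return file


if __name__ == "__main__":
    result = release(OutputFile("summary.csv"), "data custodian", approved=True)
    print(f"{result.name} is now in {result.folder}")
```

A rejected file simply stays in the output folder, where the analyst still can't read it from the outside world.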